Is AI cheating in education, or is it simply another tool like a calculator or spellchecker? That’s the debate playing out in classrooms, policy meetings, and even courts.

AI is finding its way into nearly every aspect of learning, but its role in schools sparks heated controversy. Some see it as academic dishonesty, while others argue it can support deeper learning if used responsibly.

TL;DR

- AI in schools is controversial. Many ask: is AI cheating in education, or just a new tool?

- A lawsuit in Massachusetts shows the real-world consequences of unclear AI policies.

- Research is mixed. Generative AI reduces workload but may weaken critical thinking.

- The real challenge is balancing AI as an assistant versus AI as a creator.

- Ethical use matters. Transparency and fairness can prevent misuse while embracing innovation.

A Case Study: When AI Meets Academic Policy

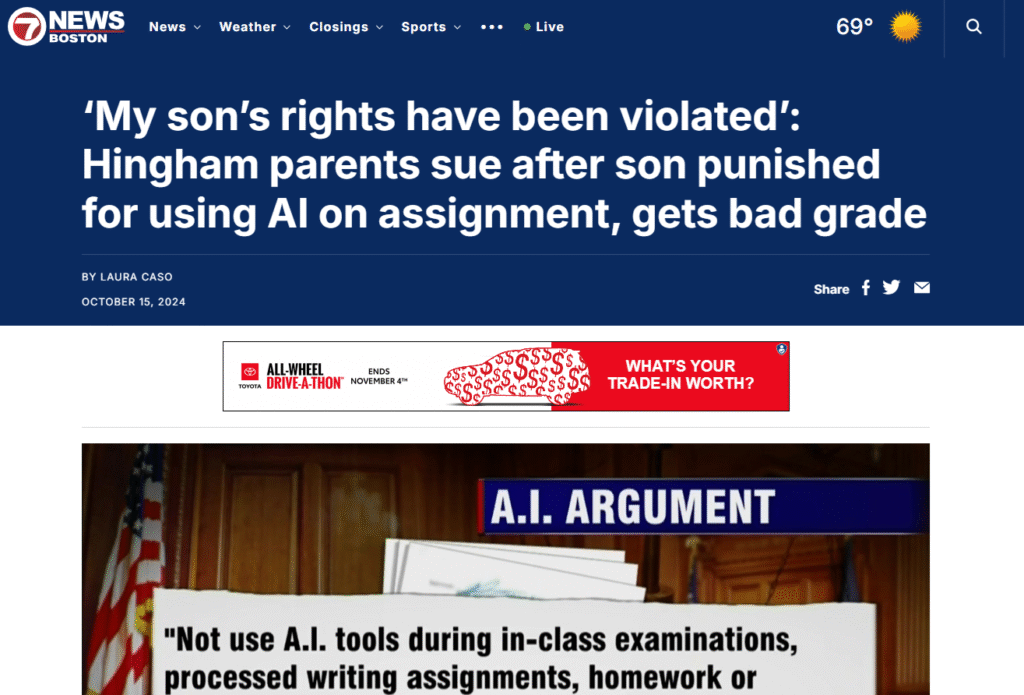

In Hingham, Massachusetts, parents are suing a school district after their son was penalized for using AI to generate notes for a history paper outline. They argue the policy against AI was added after the paper was submitted, while the school says it followed its handbook.

This case captures the big question: when is AI in education a helpful tool, and when is it considered cheating? The outcome will likely shape how other districts address AI in schools.

The Line Between Assistance and Creation

Is AI Cheating in Education When It Creates Instead of Assists?

Generative AI tools such as ChatGPT can brainstorm ideas, summarize sources, and draft outlines. Some research suggests that these tools reduce cognitive load, allowing students to work faster.

But studies also suggest that over-reliance on AI can weaken reasoning and critical thinking. Assistance enhances learning, but full AI creation risks replacing human thought.

Examples of AI assistance:

- Brainstorming essay ideas

- Organizing research notes

- Automating repetitive tasks

Examples of AI creation (where it crosses the line):

- Writing full essays

- Generating complete assignments

- Replacing student analysis

This distinction is at the heart of whether AI counts as cheating.

Shifting Norms: From Spellcheck to AI

What Past Tools Teach Us About AI in Schools

History shows that technologies once seen as cheating later became normalized in education.

- Spellcheckers were criticized for undermining spelling and grammar skills; now they’re essential.

- Calculators were once banned in math classes; now they’re standard.

- Search engines were dismissed as shortcuts; now they’re core to research.

So, is AI cheating in education, or is it just the next step in this evolution? Many experts argue that schools need updated policies rather than outright bans.

The Role of Ethics and Transparency

Ethical AI Use in Education

At its core, the ethics of AI use in schools depend on transparency, credit, and integrity.

- Transparency: Students should disclose when and how they used AI.

- Credit: Students should acknowledge what AI contributed, and AI should not replace the student’s own work.

- Integrity: Learning must remain the priority.

In creative fields, disclosure is already common. Some artists note when AI tools were used. Soon, we may see the reverse: people proudly stating “No AI was used.”

For a deeper dive, see Fast Company’s exploration of AI ethics in creative industries.

Is AI Really Cheating, or Just Evolving?

Perhaps the better question isn’t “Is AI cheating in education?” but rather “How should we redefine cheating in a world with AI?”

- In classrooms, AI should enhance, not replace, critical thinking.

- In workplaces, AI should increase productivity without eroding accountability.

- In society, AI needs guidelines and norms to ensure fair use.

AI is powerful, and whether it becomes a shortcut or a tool for growth depends on how we use it.

Pro Tip: Use AI to Learn, Not Just to Finish

Students can use AI responsibly by treating it as a study partner:

- Generate practice questions, then solve them independently.

- Summarize sources with AI, then expand with personal analysis.

- Let AI suggest outlines, but fill in arguments with original thought.

This balance ensures AI supports learning without replacing it.

Conclusion: Redefining Fair Play in Education

So, is AI cheating in education? Not necessarily. The answer depends on how AI is used.

When it supplements learning, AI can unlock new opportunities. When it replaces effort, it undermines growth. Schools, parents, and students must work together to set fair guidelines.

The challenge isn’t eliminating AI, but learning to wield it ethically and effectively.

FAQs About AI and Cheating in Education

1. Is using AI to write essays considered cheating?

In most schools today, yes, unless the school’s policy explicitly allows it.

2. Can AI improve learning instead of hurting it?

Yes. Used as a support tool, AI helps with organization and brainstorming while leaving the hard thinking to students.

3. Is AI cheating in education if it only generates outlines?

Not necessarily. Outlines can support learning, but the student should build on them independently.

4. Will AI become accepted in schools like calculators did?

Most likely. Over time, AI may become as standard as spellcheckers and search engines.

5. How can students use AI responsibly?

By being transparent, using it as assistance rather than replacement, and focusing on their own learning.
