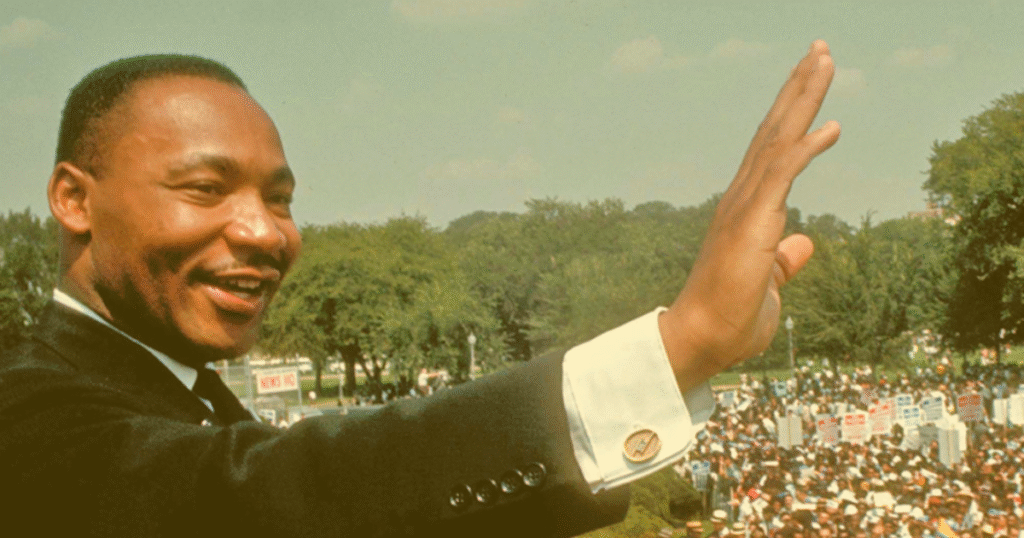

Synthetic media regulation is emerging as a key frontier in the age of AI. When Sora 2, the video-generation model from OpenAI, came under fire for unauthorized depictions of celebrities and historical figures, including Martin Luther King Jr., the industry had to ask how the rules should be rebuilt. Pausing MLK Jr. content was a strong initial signal that consent and context matter when technology meets legacy. This moment underscores that synthetic media regulation is not optional; it is essential.

TL;DR

Widespread public use of synthetic media is still very new. Pausing depictions of MLK Jr. on Sora 2 while new guardrails are built is a prudent move. It shows that consent, context, and oversight must come before mass deployment of deepfake technologies.

Synthetic Media Regulation and the MLK Jr. Pause

OpenAI announced it would stop generating videos depicting MLK Jr. on Sora 2 after the Martin Luther King Jr. estate raised objections. This decision points to the core of synthetic media regulation: when public figures or their estates say “no,” technology must listen. The pause sent a clear signal that even revered leaders require protection from misuse of their likeness.

Synthetic Media Regulation Needs Consent and Context

Allowing anyone to generate clips of deceased celebrities without limits is risky. Synthetic media regulation demands that any use of a person's data and likeness meet clear standards: permission from the estate, transparent labeling, and usage restricted to contexts like education or documentary work. Expecting estates to monitor every platform and video generation system themselves places an unfair and unmanageable burden on them, and estates of lesser-known figures may lack the resources to enforce their rights across jurisdictions.

Synthetic Media Regulation in Estate-Rights Architecture

In the U.S., post-mortem publicity rights are inconsistent. States like California, Tennessee, and Indiana grant protections that allow estates to control likenesses for decades; others like New York offer only limited rights. California’s new law even prohibits unauthorized AI-generated replicas of deceased individuals in audiovisual works. That patchwork makes synthetic media regulation complex for platforms such as Sora 2. The proposed NO FAKES Act aims to provide a national solution.

Synthetic Media Regulation and Platform Responsibility

Platforms that deploy tools like Sora 2 must build explicit safeguards. OpenAI’s “Launching Sora Responsibly” document outlines watermarking, consent-based likeness usage, and metadata tagging. But regulation goes beyond technology: the rules should account for differences in power and access, so that lesser-known personalities are protected as fairly as large estates.
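To make the idea of a safeguard concrete, here is a minimal Python sketch of a default-deny consent gate. It is purely illustrative and does not describe how OpenAI or any other platform works: the names `LikenessRequest`, `Decision`, `review_request`, and `ALLOWED_CONTEXTS` are hypothetical, and the sketch assumes that estate consent has already been verified somewhere upstream.

```python
from dataclasses import dataclass, field

# Hypothetical list of approved contexts; a real policy would be set
# by rights holders and regulators, not hard-coded like this.
ALLOWED_CONTEXTS = {"education", "documentary"}


@dataclass
class LikenessRequest:
    person: str
    context: str
    estate_consent: bool  # assumed to be verified by an upstream process


@dataclass
class Decision:
    allowed: bool
    reason: str
    metadata: dict = field(default_factory=dict)


def review_request(req: LikenessRequest) -> Decision:
    """Refuse by default; approve only with consent and an approved context."""
    if not req.estate_consent:
        return Decision(False, "no verified consent from the person or estate")
    if req.context not in ALLOWED_CONTEXTS:
        return Decision(False, f"context '{req.context}' is not an approved use")
    # Approved output carries labels so the content can be identified later.
    tags = {"ai_generated": True, "depicts": req.person, "context": req.context}
    return Decision(True, "approved", tags)
```

The design choice worth noting is the order of the checks: the request is refused unless consent is present, so an estate that never responds is protected by default rather than having to police each platform.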

Synthetic Media Regulation and Industry Impacts

The pause on MLK Jr. deepfakes reflects a broader shift. The entertainment, technology, and policy sectors are now asking: who gets to appear in AI-generated media, and under what terms? The guardrails we build now will determine whether synthetic media delivers value rather than exploitation.

FAQs

What is synthetic media regulation?

It refers to frameworks and rules governing how AI-generated content uses real people’s likenesses, voices, or identities.

Why was pausing MLK Jr. content important?

Because misuse of his likeness highlighted how quickly synthetic media can distort legacy, making regulation urgent.

What protective rights exist for deceased individuals?

Some U.S. states grant post-mortem publicity rights, but coverage is inconsistent, hence the push for national legislation such as the proposed NO FAKES Act.

What should platforms require before allowing AI recreations of people?

Verified consent from estates, transparent labeling of AI content, traceable provenance, and context-specific usage (documentary, education, etc.).

How does this affect smaller estates?

Smaller estates often lack the legal or financial resources to monitor or enforce rights, making default restrictions and platform-level protections critical.

Conclusion

Synthetic media regulation matters now because the tools are powerful and the risks real. Pausing MLK Jr. depictions on Sora 2 is a wake-up call that legacy, context, and consent must shape the future of AI-generated media. The right order of operations is clear: remove risky content, then build new policies. Our digital future depends on rules we trust.
